Hi everybody,

In reference to my prior article “vSAN – High Available Solution”, this article explains the basic architecture and gives you some insight into the technical details.

vSAN Basic Architecture

There are several different approaches to building a vSAN cluster. You have to decide between stretched and non-stretched, and between direct connect and multi node, based on requirements such as availability, performance and scalability.

Stretched: at least two nodes are stretched over two sites; additional requirements are 10 Gbit and <5 ms RTT (round trip time); layer 3 is supported; witness required

Non Stretched: all nodes are on the same site

Direct Connect: just two nodes, cabled directly without redundant Ethernet switches; witness required

Multi node: (redundant) 10 Gbit infrastructure required; up to 64 nodes in one cluster
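The requirements listed above can be written down as a small sanity check. This is only a sketch: `ClusterPlan` and `check_plan` are hypothetical names, not part of any VMware tool, and the rules are limited to what is stated in this article.

```python
from dataclasses import dataclass


@dataclass
class ClusterPlan:
    """Hypothetical description of a planned vSAN cluster."""
    nodes: int
    stretched: bool
    direct_connect: bool
    link_gbit: float
    rtt_ms: float = 0.0        # only relevant for stretched clusters
    has_witness: bool = False


def check_plan(plan: ClusterPlan) -> list:
    """Return a list of violated requirements (empty list = plan looks fine)."""
    problems = []
    if plan.direct_connect and plan.nodes != 2:
        problems.append("direct connect means exactly two nodes")
    if not plan.direct_connect and plan.nodes > 64:
        problems.append("a cluster supports up to 64 nodes")
    if plan.stretched and plan.link_gbit < 10:
        problems.append("stretched clusters need 10 Gbit")
    if plan.stretched and plan.rtt_ms >= 5:
        problems.append("stretched clusters need an RTT below 5 ms")
    if (plan.stretched or plan.direct_connect) and not plan.has_witness:
        problems.append("a witness is required")
    return problems


if __name__ == "__main__":
    plan = ClusterPlan(nodes=2, stretched=False, direct_connect=True, link_gbit=10)
    for problem in check_plan(plan):
        print(problem)
```

In this example the two-node direct connect plan is reported as incomplete because no witness has been planned yet.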

Irrespective of which deployment type you choose, every node contributes its local CPU / RAM / network / storage resources to the cluster it is attached to.

It is also possible to add nodes without local storage to the cluster; these nodes offer compute resources only.

Network Storage Backend

As a rule of thumb, 1 Gbit is supported in hybrid configurations but not recommended. All-flash is the opposite: 10 Gbit is mandatory. If you use PCIe NVMe cards, which provide transfer rates over 3500 MB/s, you should consider 40 Gbit or even 100 Gbit. This is comparatively cheap in 2-node direct connect configurations because you won't need the expensive switch infrastructure.
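To see why 10 Gbit is not enough for such a device, here is a rough back-of-the-envelope calculation. It is a sketch under simplifying assumptions: protocol overhead is ignored, MB means decimal megabytes, and the list of link speeds is just a selection of common Ethernet speeds, not an official vSAN list.

```python
# Common Ethernet link speeds in Gbit/s (assumption, not a vSAN support matrix).
LINK_SPEEDS_GBIT = [1, 10, 25, 40, 100]


def required_gbit(throughput_mb_per_s: float) -> float:
    """Convert a device throughput in MB/s to Gbit/s (1 byte = 8 bit)."""
    return throughput_mb_per_s * 8 / 1000


def smallest_sufficient_link(throughput_mb_per_s: float):
    """Return the smallest listed link speed that is not slower than the device."""
    needed = required_gbit(throughput_mb_per_s)
    for speed in LINK_SPEEDS_GBIT:
        if speed >= needed:
            return speed
    return None  # the device is faster than every listed link


if __name__ == "__main__":
    print(required_gbit(3500))             # 28.0
    print(smallest_sufficient_link(3500))  # 40
```

A single NVMe device at 3500 MB/s already corresponds to 28 Gbit/s, which is why 40 Gbit is the first link speed in this list that does not become the bottleneck.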

To carry the vSAN traffic we need at least one additional VMkernel adapter.

If you need a witness, you have to tag your management VMkernel adapter for witness traffic. So far this is only possible on the command line:

esxcli vsan network ip add -i vmk0 -T=witness

Internal Disk Groups

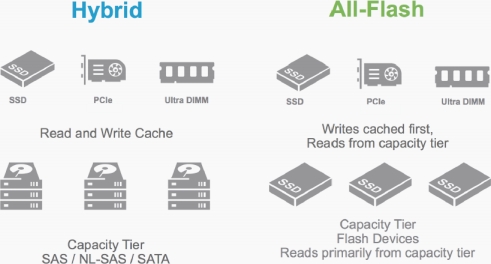

Every disk group consists of exactly one flash caching device (SAS, SATA or NVMe) for read caching and write buffering (the caching tier) and up to 7 flash or spinning disks which provide the storage capacity (the capacity tier).

Note that in all-flash configurations the caching tier is used 100% as write buffer.

In hybrid configurations (capacity tier is HDD) the caching tier is split into 30% write buffer and 70% read cache.

After creation the disk group is formatted with VMFS-L; from then on every disk group is a silo for vSAN objects.

In addition, the maximum number of disk groups per host is five.
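The limits and ratios above can be turned into a quick calculation. This is a minimal sketch using only the numbers from this section; the function names and the 400 GB cache device are made up for illustration.

```python
MAX_DISK_GROUPS_PER_HOST = 5        # from the text
MAX_CAPACITY_DEVICES_PER_GROUP = 7  # from the text


def max_capacity_devices_per_host() -> int:
    """Upper bound of capacity devices a single host can contribute."""
    return MAX_DISK_GROUPS_PER_HOST * MAX_CAPACITY_DEVICES_PER_GROUP


def cache_split_gb(cache_size_gb: float, all_flash: bool) -> dict:
    """Split a cache device into write buffer and read cache.

    All-flash: 100% write buffer. Hybrid: 30% write buffer, 70% read cache.
    """
    write_percent = 100 if all_flash else 30
    write_buffer = cache_size_gb * write_percent / 100
    return {
        "write_buffer_gb": write_buffer,
        "read_cache_gb": cache_size_gb - write_buffer,
    }


if __name__ == "__main__":
    print(max_capacity_devices_per_host())        # 35
    print(cache_split_gb(400, all_flash=False))
    print(cache_split_gb(400, all_flash=True))
```

So a fully populated host has at most 35 capacity devices plus 5 caching devices, and a hypothetical 400 GB cache device in a hybrid disk group would be split into 120 GB write buffer and 280 GB read cache.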

vSAN Datastore and Storage Policy

To create a vSAN Datastore you need existing disk groups, which are aggregated to form the vSAN Datastore.

Objects stored in it have to fulfil the requirements defined in the storage policy.

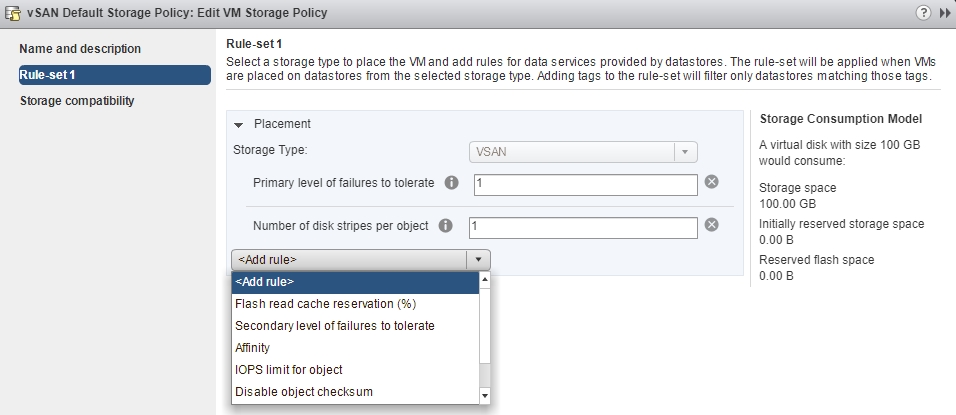

So every virtual machine is attached to a storage policy which contains some basic and optional advanced configuration parameters for storage performance and redundancy.

There are two key parameters which are mandatory in the storage policy.

Stripe width (SW) – the object is striped across different physical disks to meet performance requirements. Objects larger than 255 GByte are automatically divided into more stripes.

Faults to tolerate (FTT) – compensates for failing nodes. Faults to tolerate = 1 means all object data is stored at least twice, in different disk groups on different hosts. In conclusion, increasing this parameter buys availability at the cost of storage capacity.
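The trade-off between availability and capacity can be estimated with a few lines of code. The sketch below assumes plain mirroring, where FTT = n keeps n + 1 full copies of the data; witness components and other overhead are ignored, and the component count is a simplification. All function names are hypothetical.

```python
import math

MAX_COMPONENT_GB = 255  # split threshold mentioned in the text


def replicas(ftt: int) -> int:
    """Mirroring assumption: FTT = n keeps n + 1 full copies."""
    return ftt + 1


def raw_capacity_gb(vm_disk_gb: float, ftt: int) -> float:
    """Raw datastore capacity consumed by one fully written virtual disk."""
    return vm_disk_gb * replicas(ftt)


def components_per_replica(vm_disk_gb: float, stripe_width: int = 1) -> int:
    """Simplified estimate: at least `stripe_width` components, and enough
    components that none is larger than 255 GB."""
    by_size = math.ceil(vm_disk_gb / MAX_COMPONENT_GB)
    return max(stripe_width, by_size)


if __name__ == "__main__":
    print(raw_capacity_gb(100, ftt=1))               # 200
    print(components_per_replica(600))               # 3
    print(components_per_replica(100, stripe_width=2))  # 2
```

With FTT = 1 a 100 GB disk consumes about 200 GB of raw capacity, and a 600 GB disk is split into at least three components per copy even with a stripe width of 1.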

Additional rules can be defined, such as “flash read cache reservation” or “IOPS limit for object”.

Example vSAN Default Storage Policy:

Best regards

Nicolas Frey