The acronym stands for ‘virtual Storage Area Network’. vSAN is a storage service that runs inside the ESXi kernel and pools the locally attached disks of the hosts in a cluster. Data is stored as policy-based storage objects in the vSAN datastore for consumers like virtual machines and containers.

I love vSAN especially for its versatility. I first came in touch with this forward-looking solution in 2014, right after its introduction and the end of life of the vSA (vSphere Storage Appliance), and I thoroughly enjoyed learning the ins and outs of its capabilities. Soon thereafter, we identified the first use case within the company; more on that later.

The company provided health services all over Europe under strict SLAs. Our team, the System Operation Center (SOC), was responsible for the IT infrastructure of many locations in Germany as well as the main data centers.

… and so my journey with this technology in production began.

My first go-live with vSAN

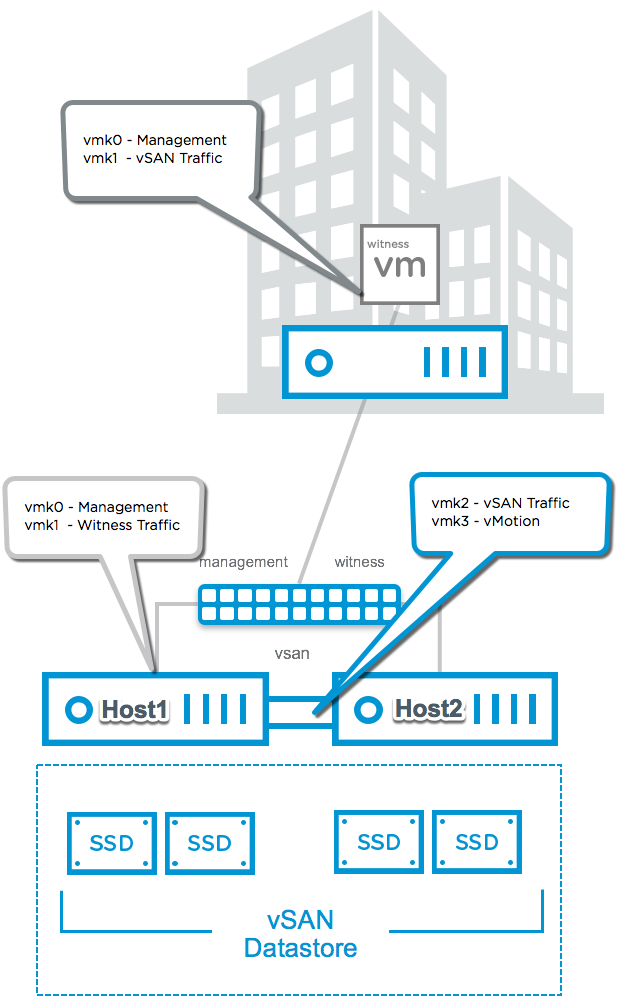

The use case was highly available, easily maintainable compute and storage for ROBO (Remote Office/Branch Office) laboratory sites. These sites usually ran about 5–10 VMs that supported the business operations, for example enabling medical personnel to run lab tests. The sites were connected to the central data center via MPLS. Budget was also a key constraint, so costs had to be kept as low as possible. For local backups, Synology NAS devices were integrated with Veeam Backup & Replication. Performance requirements were modest; some of the existing servers at the sites ran entirely on SAS HDDs. The hybrid vSAN architecture, with SSDs as cache and SAS HDDs as capacity, was therefore sufficient.

The magic word back then was 2-node direct connect: two hosts cabled back-to-back for vSAN traffic, without a switch in between, plus a witness. We were thrilled by its outstandingly low TCO (Total Cost of Ownership). It was based on standard x86 server hardware, yet offered very high availability with an RPO of 0 in case of a host or rack outage.

In addition, our team was highly skilled in VMware technology and open to new approaches. The good integration with Dell vSAN ReadyNodes was also convincing. OpenManage Server Administrator (OMSA) & iDRAC (integrated Dell Remote Access Controller, Dell's remote management board) for the win! OMSA was the software to easily update the servers' firmware via the iDRAC. The management software was fully integrated into vCenter and ESXi. It saved a lot of time, even though it had some hiccups.

The witness, an ESXi virtual machine, was hosted in the central data center. Thanks to redundant WAN connections to the ROBO sites, this was easy to accomplish without any availability or security concerns.

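One command was used frequently to tag a VMkernel interface for witness traffic, so that the production hosts could reach the witness over the WAN while vSAN data traffic stayed on the direct-connect links: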

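```shell
# Tag the VMkernel interface for vSAN witness traffic (replace vmkx with the actual interface, e.g. vmk0)
esxcli vsan network ip add -i vmkx -T witness
```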

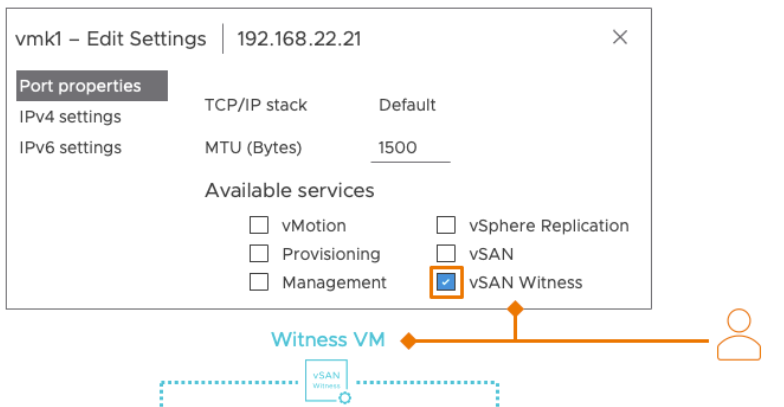

This setting is finally available via the GUI as well.

This new concept was a success story from the beginning, and both business and IT celebrated its reliability and the reduced downtime for updates. A dozen sites with a positive ROI were identified and received vSAN clusters, which were preconfigured by the SOC and shipped for fast and easy deployment.

How does vSAN work?

In the past, enterprise storage was mostly built as a dedicated 3-tier architecture, because disks had to be shared between different workloads. 3-tier means physical servers for the workloads, a storage fabric for the interconnection, and finally a storage array that provides capacity through disk management. This was the era of the Storage Area Network (SAN), based on protocols like iSCSI or FC (Fibre Channel). These were complex, siloed setups that were frustrating to maintain, update, and migrate. The teams were siloed as well: there was a storage admin, a network admin, and the server team. Each mostly had their own exclusive tools, a single pane of glass was an optimistic dream, and daily admin life was tedious and error-prone.

In contrast, vSAN aggregates the local disks (SSD, NVMe, and HDD) that are directly attached to the servers into a single datastore. Combining compute and storage in the same servers this way is called hyperconverged infrastructure (HCI). Data is stored as objects, which are replicated or striped across hosts and disks for redundancy and performance. All of this runs over a conventional Ethernet network; all-flash configurations require at least 10 Gbit/s connections with low latency.

vSAN provides both block and file storage: file shares can be offered to consumers via NFS or SMB, and iSCSI LUNs can even be presented to physical servers. Native object storage like S3 does not exist, but you can easily integrate MinIO and make use of vSAN's shared-nothing, cloud-native-ready architecture.

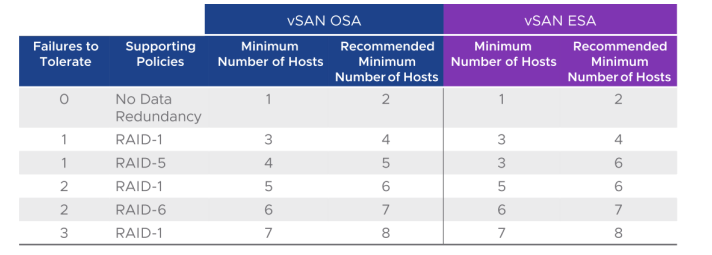

You need at least one ESXi host to build a vSAN datastore, but a single host provides no redundancy. To tolerate at least one failure, you need at least 3 hosts, or two data hosts plus a witness as in the 2-node setup described earlier.
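The host count follows a general rule for mirroring: to tolerate n failures with RAID-1, an object needs n+1 full replicas plus enough witness components to keep a majority of votes, which works out to 2n+1 hosts (fault domains). The sketch below assumes a standard cluster where each host is its own fault domain; `min_hosts_raid1` is a hypothetical helper, not a VMware API.

```python
def min_hosts_raid1(ftt: int) -> int:
    """Minimum hosts (fault domains) for a RAID-1 policy tolerating `ftt` failures.

    n + 1 hosts hold full replicas; the remaining n hosts hold witness
    components so that a majority of votes survives any n failures.
    """
    if not 0 <= ftt <= 3:
        raise ValueError("vSAN supports FTT values from 0 to 3")
    return 2 * ftt + 1


for ftt in range(4):
    print(f"FTT={ftt}: at least {min_hosts_raid1(ftt)} host(s)")
```

The 2-node design fits the same rule: for FTT=1, the witness host supplies the third fault domain.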

In the OSA (Original Storage Architecture) layout, each contributing host can have up to 5 disk groups. One disk group consists of 1 cache disk and up to 7 capacity disks, which gives a maximum of 40 disks per server. A cluster can contain up to 64 servers.
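The 40-disk figure follows directly from the disk group layout. Here is a small sketch that validates a hypothetical host layout against the OSA limits quoted above and reproduces that number; the constant and function names are illustrative only.

```python
# OSA limits as quoted in the text
MAX_DISK_GROUPS_PER_HOST = 5
CACHE_DISKS_PER_GROUP = 1
MAX_CAPACITY_DISKS_PER_GROUP = 7
MAX_HOSTS_PER_CLUSTER = 64


def osa_host_disks(capacity_disks_per_group: list[int]) -> int:
    """Return the total disk count of an OSA host after validating its layout.

    Each list entry is one disk group: 1 cache disk plus 1 to 7 capacity disks.
    """
    if not 1 <= len(capacity_disks_per_group) <= MAX_DISK_GROUPS_PER_HOST:
        raise ValueError("a contributing OSA host has 1 to 5 disk groups")
    for n in capacity_disks_per_group:
        if not 1 <= n <= MAX_CAPACITY_DISKS_PER_GROUP:
            raise ValueError("a disk group has 1 to 7 capacity disks")
    return sum(CACHE_DISKS_PER_GROUP + n for n in capacity_disks_per_group)


full_host = osa_host_disks([MAX_CAPACITY_DISKS_PER_GROUP] * MAX_DISK_GROUPS_PER_HOST)
print(full_host)                          # 40 disks per fully populated host
print(full_host * MAX_HOSTS_PER_CLUSTER)  # 2560 disks in a 64-host cluster
```

The cluster-wide number is a theoretical ceiling derived from the two limits, not a sizing recommendation.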

The newer Express Storage Architecture (ESA) relies exclusively on NVMe SSDs and limits a single server to 24 disks, all of which contribute to the capacity tier; cache disks are no longer required. At a minimum, 2 NVMe disks are needed. A small drawback is that deduplication is not yet available in ESA.

Over time, vSAN became increasingly feature-rich. An absolute stunner was the introduction of stretched clusters, which let you span a vSAN cluster across two availability zones connected over Layer 3 to increase availability.

This also introduced a separate witness host that acts as a tiebreaker: it ensures that a majority of votes can still be reached, so the cluster can clearly determine which side is authoritative and still available.
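The tiebreaker role can be illustrated with a simplified model of vSAN's quorum rule: an object stays accessible only while more than 50% of its component votes are reachable and at least one full data replica survives. This sketch assumes one vote per component and a RAID-1 object with one replica per site plus a witness component; real vSAN may assign more than one vote to a component, and the class, function, and site names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    site: str       # where the component lives
    is_data: bool   # True for a full data replica, False for a witness component
    votes: int = 1  # simplification: one vote per component


def object_accessible(components: list[Component], reachable_sites: set[str]) -> bool:
    """Return True if the object keeps quorum and still has a data replica."""
    total = sum(c.votes for c in components)
    alive = [c for c in components if c.site in reachable_sites]
    has_quorum = 2 * sum(c.votes for c in alive) > total  # strictly more than 50%
    has_data = any(c.is_data for c in alive)
    return has_quorum and has_data


# RAID-1 object in a stretched cluster: one replica per site, witness component on the witness host
obj = [
    Component("site-a", is_data=True),
    Component("site-b", is_data=True),
    Component("witness", is_data=False),
]

print(object_accessible(obj, {"site-a", "witness"}))  # True: site B lost, majority remains
print(object_accessible(obj, {"site-a"}))             # False: site B and witness lost
```

If only the inter-site link fails, the witness can side with just one of the two partitions, so only that side reaches a majority. This is the split-brain protection the witness provides.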

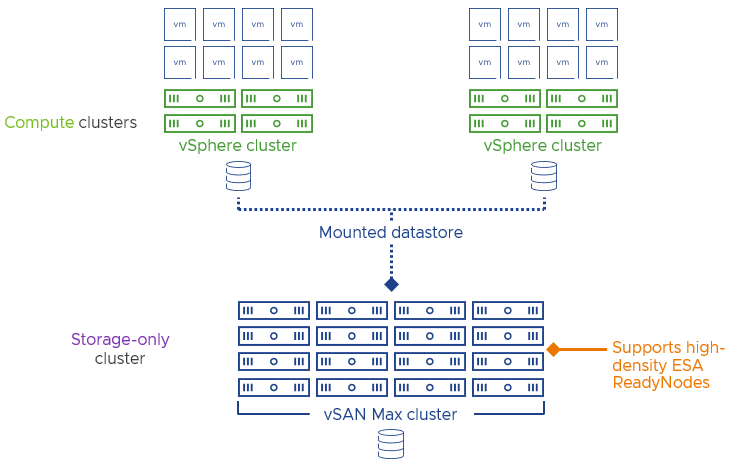

Furthermore, it became possible for hosts to mount a vSAN datastore from a separate cluster without running vSAN themselves (known as HCI Mesh).

A more recent feature, vSAN Max, is based on ESA and answers some of the challenges of the HCI architecture: it lets you form vSAN clusters that exclusively provide storage to other clusters. This removes the HCI limitation that storage and compute cannot be scaled independently.

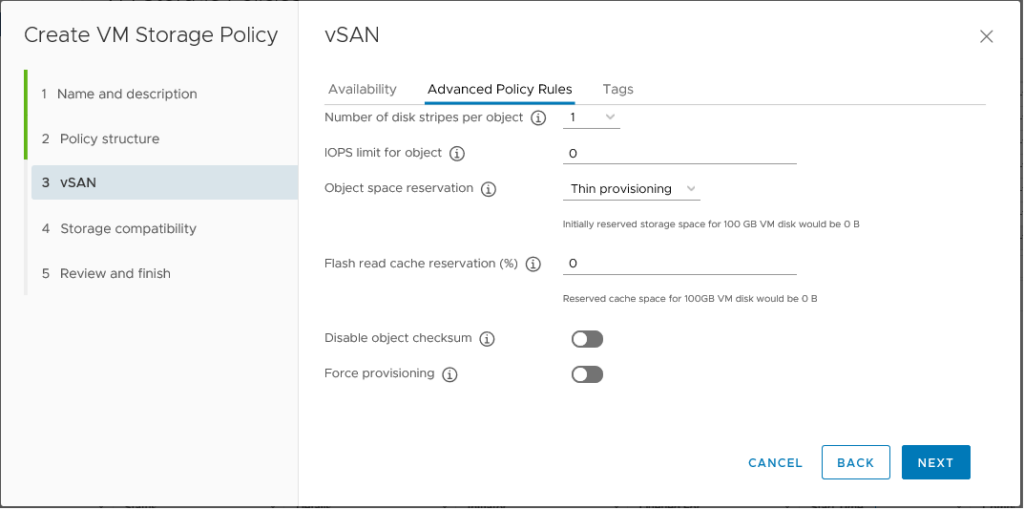

A feature that greatly increases manageability across the board is Storage Policy-Based Management (SPBM). You define placement rules through a set of high-level requirements, such as FTT (failures to tolerate) and the mechanism (RAID-1 mirroring, or RAID-5/6 erasure coding), which is essentially a trade-off between capacity efficiency and performance.

Each VM disk can have its own policy, ranging from no mirroring at all to different levels of redundancy.
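To make the efficiency-versus-performance trade-off concrete, the sketch below computes the raw capacity a disk consumes under common policies. The multipliers and minimum host counts are the commonly cited OSA figures (RAID-5 as 3 data + 1 parity, RAID-6 as 4 data + 2 parity); ESA uses different erasure-coding layouts, so treat this as an illustration. `POLICIES` and `raw_capacity_gb` are hypothetical names.

```python
from fractions import Fraction

# (mechanism, FTT) -> (raw capacity multiplier, minimum hosts); OSA figures
POLICIES = {
    ("none", 0): (Fraction(1), 1),       # no redundancy
    ("RAID-1", 1): (Fraction(2), 3),
    ("RAID-1", 2): (Fraction(3), 5),
    ("RAID-1", 3): (Fraction(4), 7),
    ("RAID-5", 1): (Fraction(4, 3), 4),  # 3 data + 1 parity
    ("RAID-6", 2): (Fraction(3, 2), 6),  # 4 data + 2 parity
}


def raw_capacity_gb(vmdk_gb: float, mechanism: str, ftt: int) -> float:
    """Raw vSAN capacity consumed by a disk of `vmdk_gb` under the given policy."""
    multiplier, _ = POLICIES[(mechanism, ftt)]
    return float(Fraction(vmdk_gb) * multiplier)  # exact arithmetic, converted once


for (mech, ftt), (_, min_hosts) in POLICIES.items():
    raw = raw_capacity_gb(100, mech, ftt)
    print(f"{mech:6} FTT={ftt}: {raw:6.1f} GB raw per 100 GB, at least {min_hosts} hosts")
```

RAID-1 keeps full copies and avoids parity calculations, while RAID-5/6 save capacity at the cost of more hosts and extra I/O for parity.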

Furthermore, you can cap each disk's IOPS through its policy and enforce sensible limits for deprioritized workloads.
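vSAN's IOPS limit rule counts I/O operations with a weighted size: with the default base size of 32 KB, a 64 KB I/O counts as two operations. The sketch below models only this accounting, not the actual throttling. Rounding partial units up is an assumption of this sketch, and the helper names are hypothetical.

```python
import math

NORMALIZED_IO_KB = 32  # default base size used to weight I/Os against the IOPS limit


def weighted_ios(io_size_kb: float) -> int:
    """Number of normalized I/Os a single request counts as (assumption: partial units round up)."""
    return max(1, math.ceil(io_size_kb / NORMALIZED_IO_KB))


def max_requests_per_second(iops_limit: int, io_size_kb: float) -> int:
    """How many requests of a given size fit under an object's IOPS limit."""
    return iops_limit // weighted_ios(io_size_kb)


print(weighted_ios(4), weighted_ios(32), weighted_ios(64), weighted_ios(256))  # 1 1 2 8
print(max_requests_per_second(1000, 64))  # 500
```

The weighting means a limit of 1000 IOPS throttles a workload issuing large sequential I/Os much earlier than one issuing small random I/Os.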

In addition, compression and deduplication can be enabled at the vSAN datastore level. Data-at-rest and data-in-transit encryption can also be configured. Data-at-rest encryption requires a key provider: either the Native Key Provider (NKP) built into vCenter or an external KMS (Key Management System).

All backend storage traffic is carried over Ethernet. This makes the solution easy to implement, and you can dedicate network cards or ports to vSAN traffic. Other transports like FC are not supported. Moreover, mounting vSAN NFS file shares as datastores on other ESXi hosts is not supported.

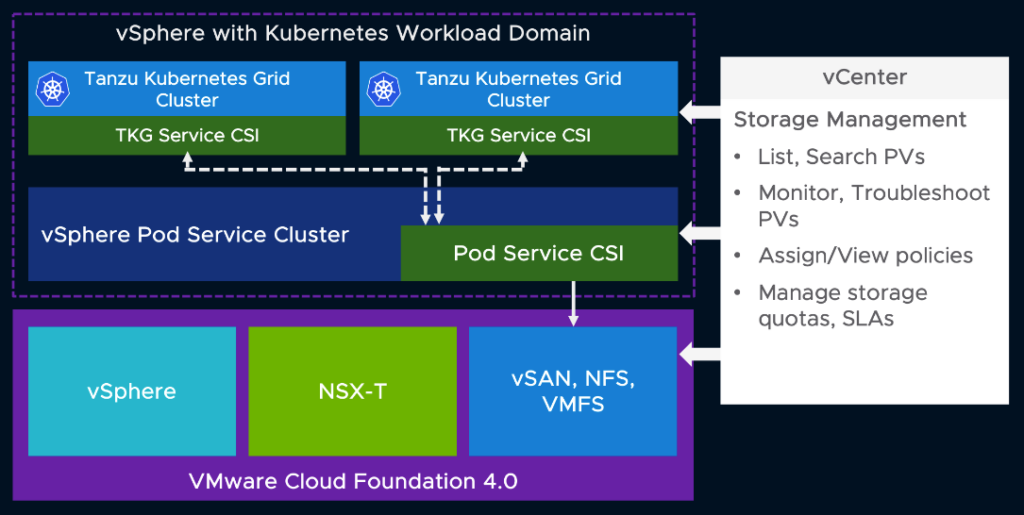

Beyond block storage for VM disks, vSAN also offers NFS or CIFS (SMB) file shares for workloads, especially for Kubernetes, in a valuable and fully integrated way.

This means that guest operating systems can consume and share file storage via vSAN. This feature is especially relevant for container workloads that require PVCs (persistent volume claims) with the RWX (ReadWriteMany) access mode.
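For illustration, here is what such an RWX claim looks like, built as a Python dict and emitted as JSON (which `kubectl apply -f` accepts just like YAML). The sketch assumes a cluster running the vSphere CSI driver with vSAN File Service enabled; the claim name, size, and storage class `vsan-file-sc` are hypothetical.

```python
import json


def rwx_pvc(name: str, size: str, storage_class: str) -> dict:
    """Build a PersistentVolumeClaim that requests shared ReadWriteMany storage."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteMany"],  # mountable read-write by many pods on many nodes
            "resources": {"requests": {"storage": size}},
            "storageClassName": storage_class,  # hypothetical class mapped to a vSAN storage policy
        },
    }


manifest = rwx_pvc("shared-data", "10Gi", "vsan-file-sc")
print(json.dumps(manifest, indent=2))
```

Saving the output to a file and applying it with `kubectl apply -f` would request a shared volume that several pods can mount at the same time.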

Why is it so special?

vSAN makes it possible to achieve very high levels of performance and availability without compromising on cost or rack space.

Its flexible design makes it easy to pool storage and to resolve problems automatically, which helps reduce business risk. In the event of a failure, for example of a disk, vSAN automatically rebuilds the affected components elsewhere in the cluster and enforces the storage policy again, bringing the objects back into compliance promptly.
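The self-healing step can be sketched as a placement problem: every component that lived on the failed host must move to a healthy host that does not already hold a component of the same object. This is a deliberately simplified first-fit model with hypothetical host and component names. Real vSAN also weighs free capacity, and it rebuilds degraded components (e.g. a failed disk) immediately but waits for the object repair timer (60 minutes by default) before rebuilding absent ones (e.g. a host that is down).

```python
def rebuild_after_failure(
    placement: dict[str, str], hosts: list[str], failed: str
) -> dict[str, str]:
    """Return a new placement with every component on `failed` moved to a spare host.

    `placement` maps component name -> host. A spare host must be healthy and
    must not already hold a component of this object, so each component still
    lives in its own fault domain.
    """
    in_use = {h for h in placement.values() if h != failed}
    spares = [h for h in hosts if h != failed and h not in in_use]
    new_placement = dict(placement)
    for component, host in placement.items():
        if host != failed:
            continue
        if not spares:
            raise RuntimeError(f"no spare host for {component}: object stays non-compliant")
        new_placement[component] = spares.pop(0)  # simple first-fit choice
    return new_placement


hosts = ["esx-01", "esx-02", "esx-03", "esx-04"]
placement = {"replica-1": "esx-01", "replica-2": "esx-02", "witness": "esx-03"}
print(rebuild_after_failure(placement, hosts, "esx-02"))
# {'replica-1': 'esx-01', 'replica-2': 'esx-04', 'witness': 'esx-03'}
```

With only three hosts, the same call raises `RuntimeError`: there is no spare fault domain, so the object stays degraded until the failed host returns. This is why a fourth host is commonly recommended for FTT=1 with RAID-1.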

Its tight integration with vSphere makes things easier. For example, it works well with VMware Site Recovery Manager, which is a great way to recover from disasters. It also integrates with the Aria Automation suite, which enables a service catalog and orchestrated deployment of classic VM and cloud-native workloads through CI/CD pipelines.

Stretched clusters even make a zero RPO (Recovery Point Objective) and a fast RTO (Recovery Time Objective) achievable. Beyond availability, vSAN also supports security through features like datastore encryption, which protects the data if disks or hosts are stolen.

Furthermore, the supporting material, such as the documentation, support services, and training offered by VMware by Broadcom, is consistently well structured and motivates you to learn and dive deeper into the technology. This increases technology adoption and reduces the risk of design flaws and misconfiguration.

According to VMware, this technology can reduce total cost of ownership, covering both acquisition and operating costs, by up to 40% (VMware ESA TCO Reduction PDF).

It is also a solid foundation for new ideas, such as cloud-based services that are easy to maintain and can be automated.

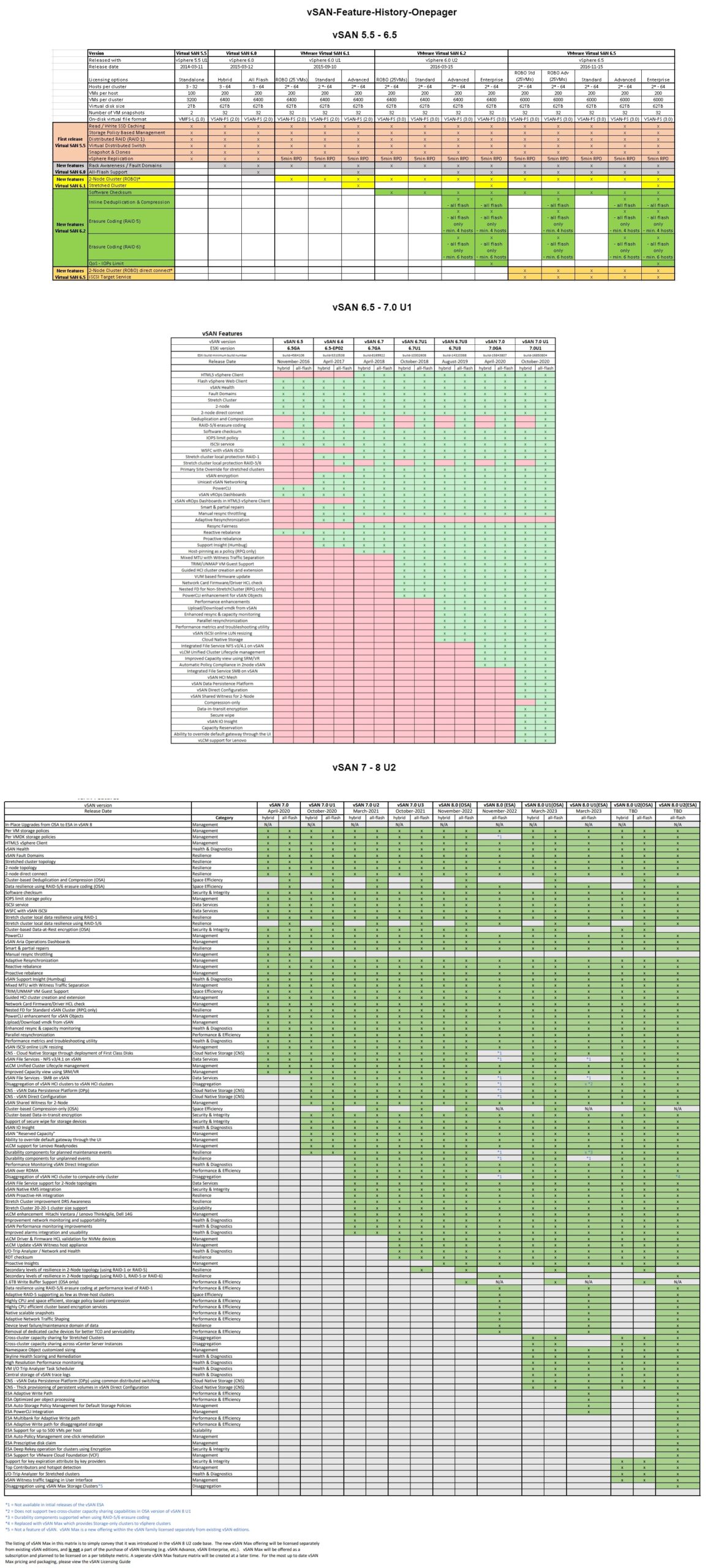

Feature History Overview