AI has crossed the chasm and become ubiquitous, yet its potential is far from exhausted. In fact, this is just the beginning. Benefiting from AI-driven guidance and creativity is within our grasp, and the coming productivity gains promise solutions to complex problems. This article introduces VMware’s Private AI Foundation, a solution that offers enterprises a private, scalable, and reliable way to accelerate AI adoption.

Understanding the need for private AI

While various AI providers simplify adoption for enterprises, a significant caveat exists. The models they provide are often opaque: black boxes with closed-source code. This lack of transparency is a risk for enterprises, because sensitive data sent to these services can be exposed. Sharing company data to obtain useful answers to intricate problems becomes a precarious venture with these services.

A notable example of this challenge arose when Samsung inadvertently leaked trade secrets through the use of ChatGPT. For more information, refer to the article linked below:

“Whoops, Samsung workers accidentally leaked trade secrets via ChatGPT”

ChatGPT doesn’t keep secrets

The challenge of our era is to safeguard confidential information while maintaining compliance and security. It is equally important to enable business and IT teams to adopt new AI solutions in an agile manner while meeting scalability, cost, and performance requirements.

Creating on-premises AI models may seem like a straightforward solution, but the requirements for private AI models are extensive and expensive. Massive amounts of data call for substantial AI co-processing power, typically provided by graphics processing units (GPUs). Automation becomes essential so that inflexible, error-prone processes do not jeopardize the gains AI brings.

Layers of Generative AI

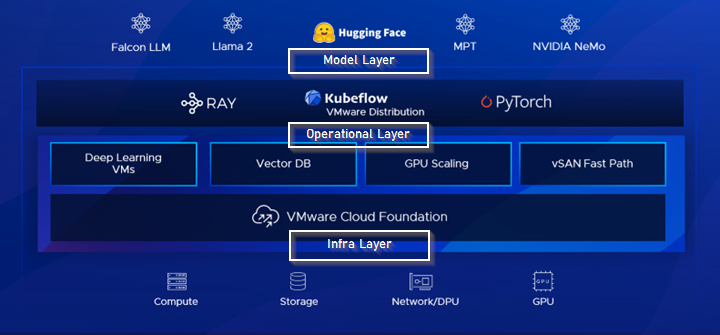

Discussing generative AI involves understanding four essential layers working in harmony to bring private AI to life:

- Infrastructure Layer: Comprising hardware acceleration (GPUs/FPGAs/ASICs), storage, and networking, this layer builds a supported, interoperable, and high-performance resource stack.

- Model Layer: This layer comprises models optimized for specific industries, available in varying sizes (S/M/L) and as either closed or open source.

- Operational Layer: Focused on deploying models responsibly, it requires new vector databases and API orchestration to integrate models effectively.

- App Layer: Concerned with user experience and SaaS apps, this layer supports the consumption of use-case-specific gen-AI services.
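To make the operational layer's mention of vector databases more concrete, here is a minimal, hypothetical sketch of the core idea: texts are stored alongside numeric embeddings and retrieved by similarity to a query embedding. The class `TinyVectorStore`, the sample texts, and the three-dimensional vectors are illustrative assumptions only; a real deployment would use a dedicated vector database and embeddings produced by a model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class TinyVectorStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self):
        self._items = []  # list of (text, embedding) pairs

    def add(self, text, embedding):
        self._items.append((text, embedding))

    def search(self, query_embedding, top_k=1):
        # Score every stored item against the query, most similar first.
        scored = [
            (cosine_similarity(query_embedding, emb), text)
            for text, emb in self._items
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:top_k]]


# Made-up embeddings; in practice an embedding model would produce these.
store = TinyVectorStore()
store.add("GPU sizing guide", [0.9, 0.1, 0.0])
store.add("Holiday policy", [0.0, 0.2, 0.9])
store.add("Storage latency notes", [0.6, 0.7, 0.1])

print(store.search([1.0, 0.2, 0.0], top_k=2))
```

In a private AI setup, a lookup like this would typically run next to the model so that the company documents being searched never leave the organization's own infrastructure.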

Architecting VMware Private AI

At the core of this solution is VMware Cloud Foundation (VCF), which establishes the infrastructure layer. VCF is optimized for AI workloads, and VMware has worked with NVIDIA so that GPUs can be divided into isolated fractions, improving both efficiency and security when accelerators are consumed.

AI workloads are dynamic and resource demanding. In VCF, you can group up to 16 GPUs into a single virtual machine, optimizing it for model training.
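As a rough illustration of the sizing arithmetic behind that limit, the sketch below works out how many training VMs of a given GPU count fit on each host. It is not a VMware API: the function `plan_training_vms`, the host names, and the GPU counts are hypothetical, and the only figure taken from this article is the 16-GPU-per-VM ceiling. It also assumes that all GPUs of a VM come from the host the VM runs on.

```python
MAX_GPUS_PER_VM = 16  # per-VM limit quoted in this article


def plan_training_vms(gpus_per_host, gpus_per_vm):
    """Return, per host, how many VMs fit and how many GPUs stay idle.

    gpus_per_host maps a (hypothetical) host name to its GPU count.
    """
    if not 1 <= gpus_per_vm <= MAX_GPUS_PER_VM:
        raise ValueError(
            f"gpus_per_vm must be between 1 and {MAX_GPUS_PER_VM}"
        )
    plan = {}
    for host, gpus in gpus_per_host.items():
        # Whole VMs that fit on this host, plus the leftover GPUs.
        vms, idle = divmod(gpus, gpus_per_vm)
        plan[host] = {"vms": vms, "idle_gpus": idle}
    return plan


# Hypothetical cluster: two hosts with 8 GPUs, one with 4.
hosts = {"esx-01": 8, "esx-02": 8, "esx-03": 4}
plan = plan_training_vms(hosts, gpus_per_vm=4)

for host, result in plan.items():
    print(host, result)
```

Running the same plan with a VM size that does not divide evenly into the host's GPU count shows stranded GPUs in `idle_gpus`, which is one reason fractional GPU sharing matters for smaller workloads.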

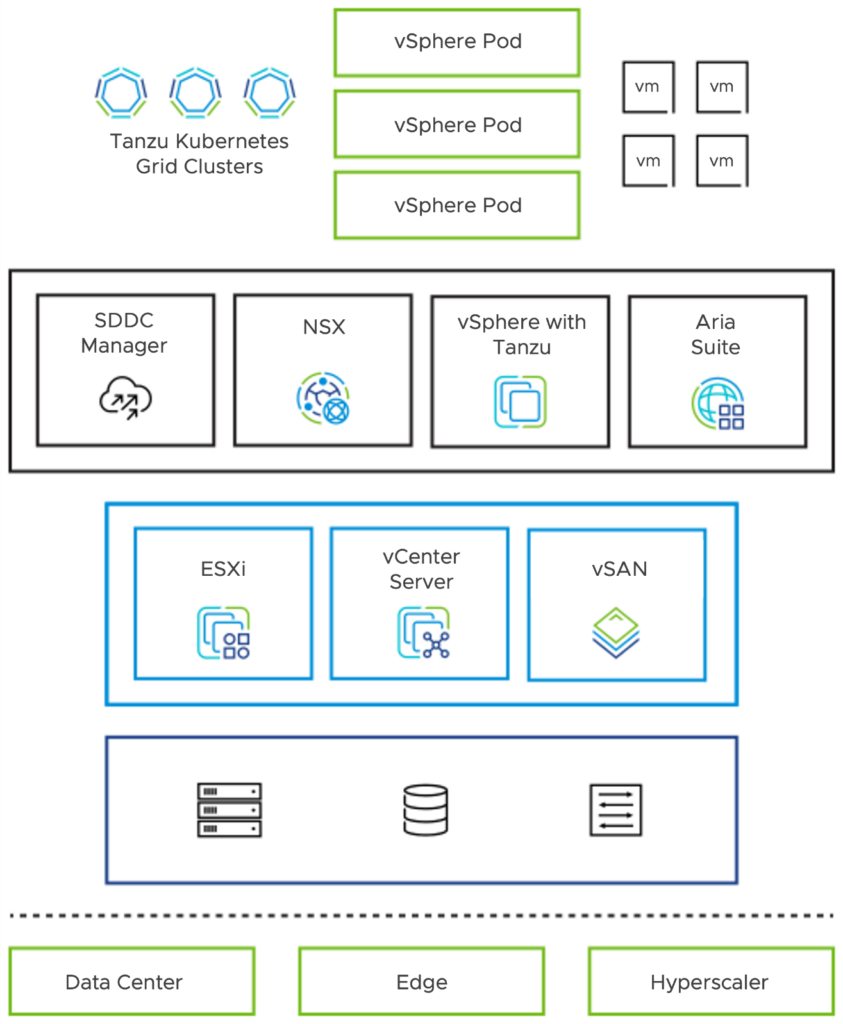

The VCF stack is a versatile turnkey solution, running in data centers, edge locations, or hyperscale environments. Embracing the multi-cloud trend, VCF provides a unified tool set across diverse clouds.

In the standard VCF architecture, a management domain hosts the infrastructure services, such as the NSX Managers and SDDC Manager, that automate the virtual infrastructure. The VI workload domains provide the infrastructure for the workloads themselves, such as gen-AI VMs or containers.

Per VCF instance, you can scale up to 14 VI workload domains, all managed through a single pane of glass. Furthermore, you can define multi-availability zone stretched clusters to offer the highest uptime in case of a disaster.
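The relationship between a VCF instance, its management domain, and its VI workload domains can be sketched as a small data model. This is a hypothetical illustration rather than anything from the VCF API or SDK: the class `VcfInstance`, the domain names, and the error type are assumptions, and the only value taken from the text is the limit of 14 VI workload domains per instance.

```python
from dataclasses import dataclass, field

MAX_VI_WORKLOAD_DOMAINS = 14  # per-instance limit quoted in this article


@dataclass
class VcfInstance:
    """Hypothetical model: one management domain plus VI workload domains."""

    name: str
    management_domain: str = "mgmt"
    vi_workload_domains: list = field(default_factory=list)

    def add_workload_domain(self, domain_name):
        # Refuse to grow past the documented per-instance maximum.
        if len(self.vi_workload_domains) >= MAX_VI_WORKLOAD_DOMAINS:
            raise RuntimeError(
                f"{self.name} already has {MAX_VI_WORKLOAD_DOMAINS} "
                "VI workload domains"
            )
        self.vi_workload_domains.append(domain_name)


instance = VcfInstance("vcf-site-a")
for i in range(1, MAX_VI_WORKLOAD_DOMAINS + 1):
    instance.add_workload_domain(f"wld-{i:02d}")

try:
    instance.add_workload_domain("wld-15")  # one more than allowed
except RuntimeError as err:
    print(err)
```

A capacity check like this is the kind of guardrail an automation pipeline might apply before requesting a new workload domain for a gen-AI project.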

These clusters consist of multiple ESXi hosts whose hardware configuration can be tailored to each VI workload domain. For example, NVMe-only ESXi hosts running the vSAN Express Storage Architecture (ESA) deliver high storage throughput and low latency, which makes them well suited to AI applications.

Overall, VCF serves as the validated bridge for physical data center resources, facilitating a unified platform for all forms of legacy and modern applications to coexist seamlessly in the locations you choose.

The release of VMware Private AI Foundation with NVIDIA

While the reference architecture already exists, the unified solution is expected to become available in Q1 2024. Stay tuned.